PowerShell is the solution for task automation. It has everything you need to iterate fast: a command-line shell with auto-completion and an object-oriented scripting language, and it runs on Windows, Linux, and macOS. PowerShell’s command-line shell is best in class, with tab completion, command prediction, aliases, in-console help (Unix man pages, anyone?), and my favorite feature, pipelines, which let you chain commands together. You can even debug directly in the shell! If you are new to PowerShell, start here.

Dataverse, on the other hand, is a managed relational database and a lot more. It hosts Dynamics 365’s data, provides rich metadata, and can even host logic and validation, with a range of productivity tools of its own. PowerShell can be handy for automating tasks and orchestrating one-off solutions in Power Platform. From another perspective, Dataverse can be useful as a managed database. You don’t need a license if the user is not a human, by the way.

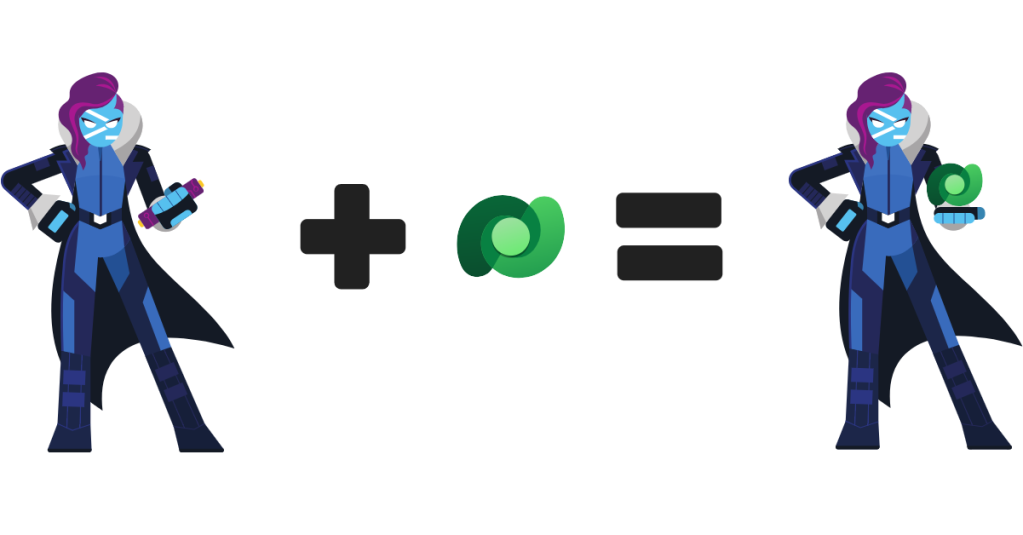

While working with Power Platform, you often need to quickly try something to see if it works, or just check some data. Other times you need to automate tasks or integrate processes that are not exposed through the UI. Take data migration as an example, or simply loading a couple of huge CSV files with some data transformation. Are you going to build a fully custom .NET solution just for that? Or use a full-fledged SSIS solution, with its notoriously troublesome Script components and projects that are not Git-friendly? Your other options are learning third-party, one-size-fits-all cloud services with their edge cases. Well, now you have another option: PSDataverse, a PowerShell module that brings Dataverse to PowerShell.

Show me an example

Let’s check how easy it is to load a CSV file called Account.csv into Microsoft Dynamics 365.

Connect-Dataverse "authority=https://login.microsoftonline.com/<tenant-id>/oauth2/authorize;clientid=<client-id>;thumbprint=<client-cert-thumbprint>;resource=https://<environment>.crm4.dynamics.com/"

Import-Csv "Account.csv" | ForEach-Object {
    @{
        Uri    = "account"
        Method = "PATCH"
        Value  = @{
            name                = $_.AccountName
            overriddencreatedon = $_.CreationDate
            # ...
        }
    }
} | ConvertTo-Json | Send-DataverseOperation

You know, that technically is two lines of code in PowerShell. Sure, I’m cheating here. I have piped four commands together, but that makes it even easier to read. Let’s go over what I’m doing here.

- The first line is obviously using Connect-Dataverse command to connect to Dataverse using a client certificate.

- The second line (which I have broken into multiple lines for clarity):
  - Imports a CSV file called Account.csv.
  - Transforms each line into a Dataverse operation using ForEach-Object.
  - Converts the operations to JSON using the ConvertTo-Json command.
  - Sends the operations to Dataverse.
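
To see what the transformation step produces before anything is sent, a small dry run can help. The sketch below is only an illustration: the two sample rows are made up, the CSV is held in memory instead of read from Account.csv, and `[ordered]` is used purely so the printed JSON keeps a predictable key order. It stops before Send-DataverseOperation, so it needs neither the module nor a connection.

```powershell
# Sample rows standing in for Account.csv (made-up values for illustration).
$csv = @"
AccountName,CreationDate
Contoso,2020-01-15
Fabrikam,2021-06-30
"@

# Turn each CSV row into an operation, exactly as in the pipeline above,
# but keep the result in a variable instead of sending it.
$operations = $csv | ConvertFrom-Csv | ForEach-Object {
    [ordered]@{
        Uri    = "account"
        Method = "PATCH"
        Value  = [ordered]@{
            name                = $_.AccountName
            overriddencreatedon = $_.CreationDate
        }
    }
}

# Render each operation as one compact JSON string for inspection.
$json = $operations | ForEach-Object { $_ | ConvertTo-Json -Compress }
$json
```

Once the JSON looks right, the same ForEach-Object block can be dropped back into the full pipeline.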

A Power Platform professional might say sending several operations one-by-one will not be ideal in terms of performance. I do agree with that statement. Send-DataverseOperation has two parameters for just that.

Improving performance

Dataverse provides two features to improve performance over its API when needed. The first is parallelism. Each user can send data in parallel over a number of lanes that Microsoft configures. By default the number is 20, but you can ask Microsoft to increase it when needed (e.g. for a data migration). The other is batching. To use it, you put several operations in a single HTTP request with a special syntax and structure and send it to the API, where it is taken apart and executed one by one. All the operations run in a single transaction, however. In other words, they succeed together or fail (and are rolled back) together. To use these two features, Send-DataverseOperation provides two parameters.

- -BatchCapacity: a number between 0 (default) and 1000 that defines the maximum number of operations that can be put in a single batch.
- -MaxDop: a number between 1 (default) and 1000 that indicates how many data lanes will be used to send data in parallel.
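
As a rough illustration of how the two parameters might be combined for a larger load, the sketch below works out how many batches a given capacity implies. The sample operations and the values 100 and 4 are assumptions picked for the example, not recommendations, and the actual call is left as a comment so nothing is sent by accident.

```powershell
# Build 250 sample operations in memory (made-up data, nothing is sent).
$operations = 1..250 | ForEach-Object {
    @{ Uri = "account"; Method = "PATCH"; Value = @{ name = "Sample account $_" } }
}

# Illustrative settings, not recommendations.
$batchCapacity = 100   # at most 100 operations per batch
$maxDop        = 4     # up to 4 parallel lanes

# Smallest number of batches needed to hold every operation.
$batchCount = [int][math]::Ceiling($operations.Count / $batchCapacity)
$summary = "{0} operations -> {1} batches of at most {2}, over up to {3} lanes" -f `
    $operations.Count, $batchCount, $batchCapacity, $maxDop
$summary

# With PSDataverse loaded and a connection open, the same settings would be passed as:
# $operations | ConvertTo-Json | Send-DataverseOperation -BatchCapacity $batchCapacity -MaxDop $maxDop
```

Because every operation in a batch shares one transaction, a larger capacity also means that more work is rolled back when a single operation fails.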

As you can see, the default values mean that batching is off and only one lane is used. This makes experimentation easier and safer. For example, running the following command:

@{Uri="WhoAmI"} | Send-DataverseOperation

will give you the BusinessUnitId, UserId, and OrganizationId of the current user:

@odata.context : https://helloworld.crm4.dynamics.com/api/data/v9.2/$metadata#Microsoft.Dynamics.CRM.WhoAmIResponse

BusinessUnitId : 6f202e6c-e471-ec11-8941-000d3adf0002

UserId : 88057198-a9b1-ec11-9840-00567ab5c181

OrganizationId : e34c95a5-f34c-430c-a05e-a23437e5b9fa

What’s more

There is a lot more to PSDataverse than what has been covered above. The best way to get to know it is to download PSDataverse from its GitHub repository and try it. There are several features in its core, such as automatic transient fault detection and retry, automatically adapting the pace based on hints provided by Dataverse, and more.

Keep in mind that PSDataverse is a free, open-source PowerShell module, and it can benefit from your suggestions, bug reports, and contributions. The module is currently in alpha, but I have already used it in a data migration project for a major bank in Europe. I try to use it for different tasks as frequently as I can so that it fits more and more use cases, and I put developer productivity above all.
